Building an Audiovisual Synth #3: Architecture and First Prototype

This one is about sharing data between threads, deciding on an architecture, and building the first prototype.

My original plan was to first learn the foundations of desktop UIs and then do the same for embedded systems. I ditched the latter completely, and even the desktop UI part did not go as planned.

Building Desktop UIs with egui

I started by learning the foundations of egui, a framework for building UIs in Rust. Compared to other frameworks like Iced and Tauri, it looked quite easy, and getting basic elements into a window was indeed simple. I also connected it to fundsp and was able to control some shared audio parameters from the UI, which was great.
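Sharing a single value, such as an oscillator frequency, between a UI thread and an audio thread can be done without locks by storing the f32's bit pattern in an atomic integer. The std-only sketch below illustrates that idea under this assumption; `SharedParam` is a hypothetical name and not part of fundsp, which has its own mechanism for shared values.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::sync::Arc;

/// Hypothetical lock-free f32 parameter: the UI thread writes it, the audio
/// thread reads it. The value is stored as its raw bit pattern in an AtomicU32.
#[derive(Clone)]
pub struct SharedParam(Arc<AtomicU32>);

impl SharedParam {
    pub fn new(value: f32) -> Self {
        SharedParam(Arc::new(AtomicU32::new(value.to_bits())))
    }

    /// Called from the UI thread, e.g. when a slider moves.
    pub fn set(&self, value: f32) {
        // Relaxed is enough for a single, independent value.
        self.0.store(value.to_bits(), Ordering::Relaxed);
    }

    /// Called from the audio thread; never blocks.
    pub fn get(&self) -> f32 {
        f32::from_bits(self.0.load(Ordering::Relaxed))
    }
}
```

Each clone shares the same atomic, so the UI keeps one handle and the audio graph another. This works well for independent parameters; when several values must change together, a consistent snapshot of all of them is the safer choice.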

However, the next two steps turned out to be quite difficult:

Drawing the shader next to the UI in the same window.

Adding a second OS window to show the shader standalone on a separate screen.

In fact, it was so challenging that I gave up after a while. Besides, a desktop UI was never part of the initial plan; I only tried it out of curiosity.

New Rendering Backend with Miniquad

While trying to connect the shader to egui, I was also overwhelmed by the complexity of wgpu. So I looked for something simpler and found miniquad.

Miniquad is cross-platform, based on OpenGL, and focuses on low-spec devices, which makes it a good fit for the Raspberry Pi. It is also much easier to set up, and connecting uniforms to shaders written in GLSL is straightforward, which suits my use case even better.
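One way to keep shader uniforms in sync with the synth state is to mirror that state in a plain `#[repr(C)]` struct whose fields follow the order of the uniforms declared for the shader. The sketch below assumes hypothetical uniform names (`cv`, `gate`, `time`). In miniquad, such a struct is passed to `apply_uniforms` together with a matching uniform layout, but the exact call signature differs between miniquad versions, so the upload step is left out here.

```rust
/// Hypothetical uniform block mirroring the synth state (4 CV, 4 gate) plus time.
/// #[repr(C)] keeps the field order and layout predictable, so the struct's bytes
/// line up with a uniform layout declared in the same order. The matching GLSL
/// declarations might look like:
///   uniform vec4 cv;
///   uniform vec4 gate;
///   uniform float time;
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct ShaderUniforms {
    pub cv: [f32; 4],
    pub gate: [f32; 4], // gates are sent as 0.0 / 1.0 floats
    pub time: f32,
}

/// Builds the uniform struct from the shared state once per frame.
pub fn uniforms_from_state(cv: [f32; 4], gates: [bool; 4], time: f32) -> ShaderUniforms {
    ShaderUniforms {
        cv,
        gate: gates.map(|g| if g { 1.0 } else { 0.0 }),
        time,
    }
}
```

Converting gates to floats keeps the GLSL side simple, since a shader can multiply by the gate value directly instead of branching on a boolean.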

Restructuring Code into Modules

With the rendering sorted out, I was still stuck on the egui integration and ran into some dependency issues. This led me to restructure my code and split it into separate modules, which helped a lot as the program kept growing.

Sharing Data Between Threads

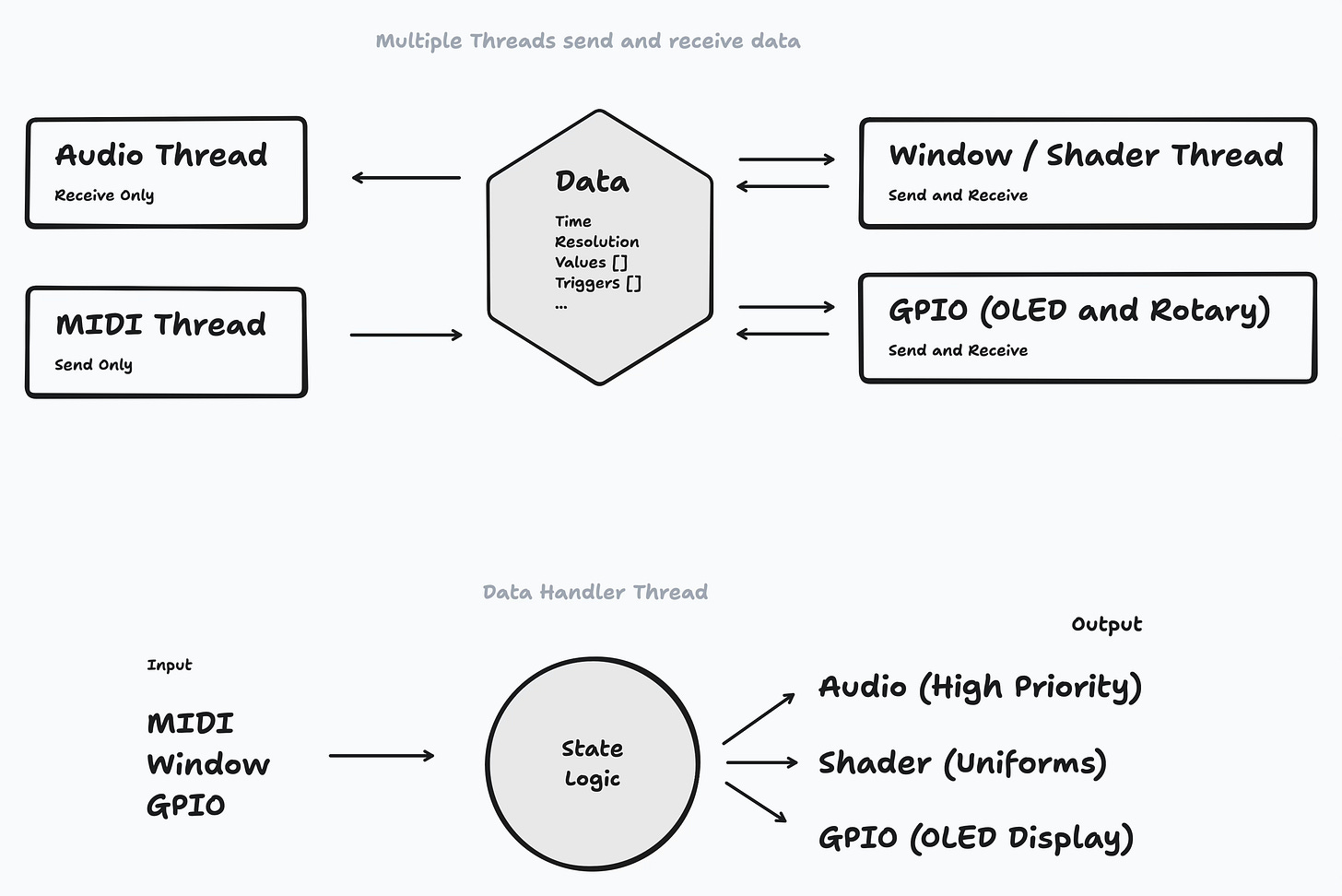

Each module was running in a separate thread, so the big question was how to get data in and out of them. I researched approaches such as Arc<Mutex>, MPSC channels, ring buffers, Crossbeam channels, and triple buffers, to name a few.

This led to a new (or, to be honest, my first) architectural decision: using a coordinator pattern. In this pattern, all sharable data is defined in a single struct, and a dedicated coordinator thread is responsible for receiving this data from the other threads and sending it to them.
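A minimal sketch of the coordinator pattern, assuming the 4 CV / 4 gate state of the first prototype. To stay dependency-free it uses `std::sync::mpsc` in place of Crossbeam channels and pushes state snapshots to subscribers over channels as well; `SynthState`, `Message`, and `spawn_coordinator` are hypothetical names.

```rust
use std::sync::mpsc::{Receiver, Sender};
use std::thread::{self, JoinHandle};

/// All sharable data lives in one struct (here: 4 CV and 4 gate values).
#[derive(Clone, Copy, Debug, Default, PartialEq)]
pub struct SynthState {
    pub cv: [f32; 4],
    pub gate: [bool; 4],
}

/// Messages that input threads (MIDI, keyboard, mouse) send to the coordinator.
#[derive(Debug)]
pub enum Message {
    SetCv(usize, f32),
    SetGate(usize, bool),
    Shutdown,
}

/// Spawns the coordinator thread. It is the only place the state is mutated:
/// it applies incoming messages and forwards a copy of the new state to every
/// subscriber. The final state is returned when the thread ends.
pub fn spawn_coordinator(
    inbox: Receiver<Message>,
    subscribers: Vec<Sender<SynthState>>,
) -> JoinHandle<SynthState> {
    thread::spawn(move || {
        let mut state = SynthState::default();
        // recv() blocks until a message arrives or all senders are dropped.
        while let Ok(msg) = inbox.recv() {
            match msg {
                Message::SetCv(i, v) if i < 4 => state.cv[i] = v.clamp(0.0, 1.0),
                Message::SetGate(i, on) if i < 4 => state.gate[i] = on,
                Message::Shutdown => break,
                _ => continue, // ignore out-of-range indices
            }
            for tx in &subscribers {
                // A disconnected subscriber is not fatal for the coordinator.
                let _ = tx.send(state);
            }
        }
        state
    })
}
```

Because only the coordinator mutates the state, no locks are needed, and each input thread only holds a cheap clone of the `Sender<Message>`.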

Each thread can exchange data with the coordinator in a different way. For example, the MIDI thread sends messages to the coordinator over a Crossbeam channel, and the coordinator parses them and updates the state. The audio thread, in turn, reads the current state from the coordinator through a triple buffer.
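A triple buffer lets the audio thread always read the latest complete state without ever blocking, which matters because an audio callback should not wait on locks. The hand-rolled, std-only sketch below shows the mechanism: three slots, one owned by the writer, one by the reader, and one in the middle that they swap atomically. The names are hypothetical; for real use, a maintained crate such as `triple_buffer` is the safer choice.

```rust
use std::cell::UnsafeCell;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;

const INDEX_MASK: usize = 0b11;
const FRESH: usize = 0b100; // set when the middle slot holds unread data

struct Shared<T> {
    buffers: [UnsafeCell<T>; 3],
    middle: AtomicUsize, // index of the middle slot, plus the FRESH flag
}

// Safety: the writer only touches buffers[back], the reader only touches
// buffers[front], and back, front, and middle are always three distinct indices.
unsafe impl<T: Send> Sync for Shared<T> {}

pub struct Writer<T> { shared: Arc<Shared<T>>, back: usize }
pub struct Reader<T> { shared: Arc<Shared<T>>, front: usize }

pub fn triple_buffer<T: Clone>(initial: T) -> (Writer<T>, Reader<T>) {
    let shared = Arc::new(Shared {
        buffers: [
            UnsafeCell::new(initial.clone()),
            UnsafeCell::new(initial.clone()),
            UnsafeCell::new(initial),
        ],
        middle: AtomicUsize::new(1),
    });
    (Writer { shared: shared.clone(), back: 0 }, Reader { shared, front: 2 })
}

impl<T> Writer<T> {
    /// Writes a new value into the back slot and publishes it; never blocks.
    pub fn write(&mut self, value: T) {
        unsafe { *self.shared.buffers[self.back].get() = value };
        // Release our written slot to the middle and take the old middle slot.
        let old = self.shared.middle.swap(self.back | FRESH, Ordering::AcqRel);
        self.back = old & INDEX_MASK;
    }
}

impl<T> Reader<T> {
    /// Returns the most recently published value; never blocks.
    pub fn read(&mut self) -> &T {
        if self.shared.middle.load(Ordering::Relaxed) & FRESH != 0 {
            // Hand our old slot back and take the fresh middle slot.
            let old = self.shared.middle.swap(self.front, Ordering::AcqRel);
            self.front = old & INDEX_MASK;
        }
        unsafe { &*self.shared.buffers[self.front].get() }
    }
}
```

In this setup the coordinator would own the `Writer` and call `write` after every state change, while the audio callback calls `read` once per block. States the reader never got to see are simply overwritten, which is exactly the "latest value wins" behavior an audio thread needs.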

First Working Prototype

This all took quite a while and was a bit intense, but it now seems to work and is the basis for my first prototype.

It has the following features:

Runs on Mac and Raspberry Pi.

Has a shared state (4 CV and 4 gate values).

Visuals and audio are controlled by the same state.

The state can be modified with the mouse, keyboard, and MIDI.

Shaders are auto-detected from a folder and can be swapped at runtime.

Some smaller details: high-DPI support, fullscreen, and automatic MIDI device detection.
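For the MIDI path, the raw bytes of a channel message can be parsed into state updates before they reach the coordinator. The mapping below (CC 20 to 23 drive CV slots 0 to 3, notes 60 to 63 drive gates 0 to 3) is a hypothetical example, not the prototype's actual assignment; the status bytes follow the MIDI 1.0 specification, including Note On with velocity 0 acting as Note Off.

```rust
/// State updates produced from incoming MIDI messages.
#[derive(Debug, PartialEq)]
pub enum Update {
    Cv { index: usize, value: f32 },
    Gate { index: usize, on: bool },
}

// Hypothetical mapping: CC 20-23 drive CV 0-3, notes 60-63 (C4 to D#4) drive gates 0-3.
const FIRST_CC: u8 = 20;
const FIRST_NOTE: u8 = 60;

/// Parses one raw MIDI 1.0 channel message. The channel nibble is ignored,
/// so the mapping responds on all 16 channels.
pub fn parse_midi(bytes: &[u8]) -> Option<Update> {
    let (&status, data) = bytes.split_first()?;
    match (status & 0xF0, data) {
        // Control Change: controller number, value 0-127 scaled to 0.0-1.0.
        (0xB0, [cc, value, ..]) if (FIRST_CC..FIRST_CC + 4).contains(cc) => Some(Update::Cv {
            index: (cc - FIRST_CC) as usize,
            value: *value as f32 / 127.0,
        }),
        // Note On; velocity 0 is treated as Note Off.
        (0x90, [note, velocity, ..]) if (FIRST_NOTE..FIRST_NOTE + 4).contains(note) => {
            Some(Update::Gate { index: (note - FIRST_NOTE) as usize, on: *velocity > 0 })
        }
        // Note Off.
        (0x80, [note, _, ..]) if (FIRST_NOTE..FIRST_NOTE + 4).contains(note) => {
            Some(Update::Gate { index: (note - FIRST_NOTE) as usize, on: false })
        }
        // Everything else (other controllers, notes, or message types) is ignored.
        _ => None,
    }
}
```

Each `Update` maps directly onto a message for the coordinator, so the MIDI thread only needs to parse and forward.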

Challenges with MIDI Latency

But of course, some things don't work yet. In particular, MIDI input has quite high latency on the Raspberry Pi. Since this is not an issue on the Mac and mouse input is responsive on the Pi, I suspect it might be related to the hardware.

Options for Adjusting the Project Path

While building this first prototype, I noticed a few things that might affect the final outcome of the project.

Creating a meaningful connection between audio and visuals is quite hard. Beyond shared parameters, I researched wave terrain synthesis and considered using the shader output as an oscillator source. But this would still be a lot of work, and I am not sure the Pi can handle it.
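Wave terrain synthesis reads a 2D surface along a closed orbit: the orbit's frequency sets the pitch, and its shape sets the timbre. The sketch below uses a hypothetical analytic terrain as a stand-in for the shader output and an elliptical orbit with assumed radii; in the shader-based version, `terrain` would sample the rendered image instead.

```rust
use std::f32::consts::TAU;

/// Hypothetical terrain: a smooth 2D function over [-1, 1]^2 with output in [-1, 1].
/// A shader-based version would look up the rendered frame here instead.
pub fn terrain(x: f32, y: f32) -> f32 {
    (3.0 * x).sin() * (2.0 * y).cos()
}

/// Wave terrain oscillator: traces an elliptical orbit across the terrain
/// and outputs the terrain height at each point as the audio signal.
pub struct WaveTerrain {
    phase: f32,        // position on the orbit in [0, 1)
    pub freq: f32,     // orbit frequency in Hz, i.e. the pitch
    pub radius_x: f32, // orbit shape; changing it changes the timbre
    pub radius_y: f32,
    sample_rate: f32,
}

impl WaveTerrain {
    pub fn new(freq: f32, sample_rate: f32) -> Self {
        WaveTerrain { phase: 0.0, freq, radius_x: 0.8, radius_y: 0.5, sample_rate }
    }

    /// Produces one sample and advances the orbit.
    pub fn next_sample(&mut self) -> f32 {
        let angle = TAU * self.phase;
        let x = self.radius_x * angle.cos();
        let y = self.radius_y * angle.sin();
        self.phase = (self.phase + self.freq / self.sample_rate).fract();
        terrain(x, y)
    }
}
```

Modulating `radius_x` and `radius_y` from the shared CV values would be one way to let the same state shape both the picture and the sound.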

I also thought about building several audio engines that people can choose from. This would be simpler, but I am not convinced of the benefit.

Writing DSP code to generate sound is quite complicated. It would be very nice to have something similar to GLSL to describe sound creation. I think Faust is very close, but it seems very hard to integrate.

Do I really need a dedicated screen and inputs on the Pi? A MIDI input is quite universal and offers a flexible way to integrate the device into existing workflows. From a learning perspective, building the screen and inputs is still worthwhile, but maybe in a different form.

I really like modulating parameters based on time instead of using explicit triggers. Maybe it would be nice to build a device that does exactly that and sends the data over MIDI or something with higher resolution. This would make a nice parallel project for learning embedded Rust as well.
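Time-based modulation can be computed directly from elapsed time, with no triggers involved. One way to get more than MIDI's usual 7-bit resolution is a 14-bit Control Change pair: the MSB goes on a controller from 0 to 31 and the LSB on the same number plus 32, giving 16,384 steps instead of 128. The `lfo` and `cc14` functions below are hypothetical helpers illustrating both ideas.

```rust
use std::f64::consts::TAU;

/// Hypothetical time-based modulation source: a sine LFO evaluated from the
/// elapsed time instead of being advanced by trigger events. Output range: [0, 1].
pub fn lfo(time_s: f64, rate_hz: f64) -> f64 {
    0.5 + 0.5 * (TAU * rate_hz * time_s).sin()
}

/// Encodes a value in [0, 1] as a 14-bit MIDI 1.0 Control Change pair:
/// MSB on controller `cc` (0-31), LSB on controller `cc + 32`.
/// Panics if the channel or controller number is out of range.
pub fn cc14(channel: u8, cc: u8, value: f64) -> [[u8; 3]; 2] {
    assert!(channel < 16 && cc < 32);
    let v = (value.clamp(0.0, 1.0) * 16383.0).round() as u16; // 0..=16383
    let status = 0xB0 | channel; // Control Change on the given channel
    [
        [status, cc, (v >> 7) as u8],        // MSB first
        [status, cc + 32, (v & 0x7F) as u8], // then LSB
    ]
}
```

A device built this way would evaluate `lfo` at a fixed interval and send the two resulting messages; a receiver that ignores the LSB still gets a usable 7-bit value from the MSB.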

Next Steps

As you can see, there are many possible directions, and I need to decide on one. The next step is still to learn how to use an embedded display, since it will be needed either way.