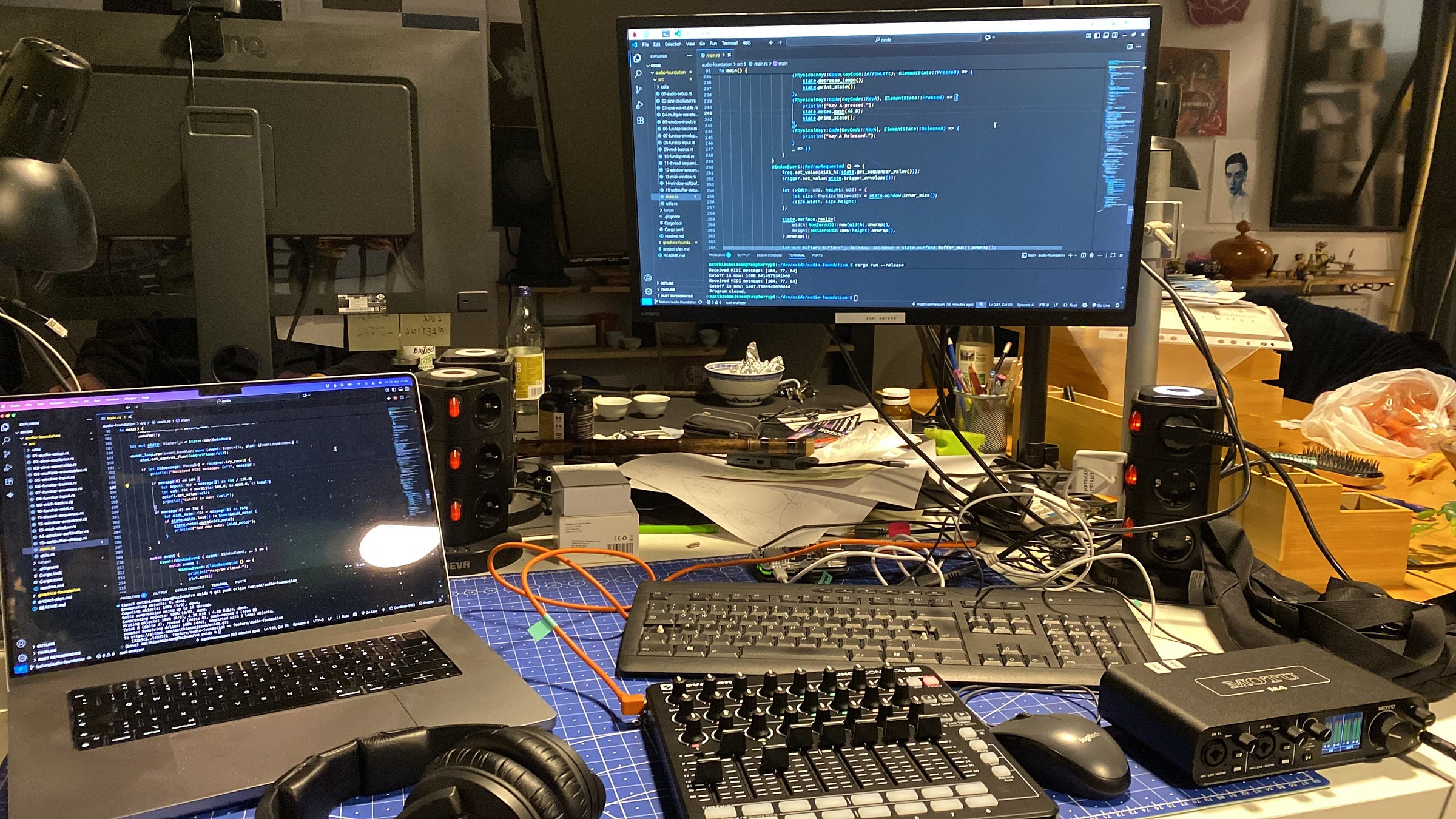

Building an Audiovisual Synth #2: The Audio Foundation

Setting up a DSP graph, connecting it to the audio output and controlling its parameters with mouse, keyboard and MIDI input.

After exploring the basics of the visual side of things, I moved on to the audio part. In short, audio is complex: it requires precise timing and careful techniques for sharing data between threads.

Overview

Connecting to Hardware with cpal

The first step was to get a connection to the system's output devices (such as speakers). A library called cpal handles this.

It sets up a host, which connects to an output device, which in turn is fed samples through an audio stream. This audio stream is key: it requests an exact number of samples, and we need to deliver them on time.
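To make the "exact number of samples, on time" contract concrete, here is a minimal sketch of what the body of such a stream callback has to do. It uses only the standard library; `SineSource` and `fill` are hypothetical names for illustration and are not part of cpal.

```rust
use std::f32::consts::TAU;

/// Hypothetical stand-in for the state an audio callback owns.
pub struct SineSource {
    phase: f32, // position in the current cycle, 0.0..1.0
    freq_hz: f32,
    sample_rate: f32,
}

impl SineSource {
    pub fn new(freq_hz: f32, sample_rate: f32) -> Self {
        Self { phase: 0.0, freq_hz, sample_rate }
    }

    /// Fill exactly `out.len()` samples: the driver decides the length,
    /// we only decide the content. No allocation, locks or I/O in here.
    pub fn fill(&mut self, out: &mut [f32]) {
        let step = self.freq_hz / self.sample_rate;
        for sample in out.iter_mut() {
            *sample = (self.phase * TAU).sin();
            // Keep the phase across calls so consecutive buffers join smoothly.
            self.phase = (self.phase + step).fract();
        }
    }
}
```

In a real program a function like `fill` would be called from inside the stream's data callback, once per requested buffer.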

Creating samples for the buffer with fundsp

To create samples, you can use a DSP library like fundsp. It includes many basic nodes such as oscillators, filters and effects, which you can easily connect to build an audio graph.

One very nice feature is the envelope node. It lets you calculate any signal based on time and then feed it into whatever you want. fundsp can also create variables that are shared across threads, which is really important, because the whole graph runs in a separate, high-priority audio thread.
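The idea behind a variable that is safe to share with the audio thread can be sketched with the standard library alone. The `SharedParam` type below is a hypothetical illustration of the principle (store the float's bits in an atomic, so neither side ever blocks); it is not fundsp's actual implementation.

```rust
use std::sync::atomic::{AtomicU32, Ordering};
use std::sync::Arc;

/// Hypothetical lock-free f32 parameter. The float is stored as raw bits
/// in an atomic integer, so reads and writes never take a lock.
#[derive(Clone)]
pub struct SharedParam(Arc<AtomicU32>);

impl SharedParam {
    pub fn new(value: f32) -> Self {
        Self(Arc::new(AtomicU32::new(value.to_bits())))
    }

    /// Called from a control thread (window, MIDI, ...).
    pub fn set_value(&self, value: f32) {
        self.0.store(value.to_bits(), Ordering::Relaxed);
    }

    /// Called from the audio thread whenever it needs the current value.
    pub fn value(&self) -> f32 {
        f32::from_bits(self.0.load(Ordering::Relaxed))
    }
}
```

Cloning a `SharedParam` gives a second handle to the same value, so one clone can move into the audio callback while the other stays with the control code.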

Controlling parameters with mouse and keyboard

As in the graphics part, we can use winit to display a window for user input. The main work happens in an event_loop(), where we react to events from the mouse or keyboard. This loop runs in its own thread, independently of the audio. Based on those events, we then call var.set_value() to update a shared value that controls a synth parameter.
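Before a mouse position can be written into a shared value, it has to be turned into something musically useful. The sketch below maps a horizontal cursor position to a frequency on an exponential scale. The function name, the parameter ranges and the choice of an exponential curve are assumptions for illustration, not something taken from the project.

```rust
/// Hypothetical mapping from a cursor x position (in pixels) to a frequency.
/// An exponential curve is used because pitch perception is roughly
/// logarithmic: equal mouse distances then give equal musical intervals.
pub fn cursor_to_freq(x: f64, window_width: f64, min_hz: f32, max_hz: f32) -> f32 {
    // Normalize to 0.0..=1.0 and guard against positions outside the window.
    let t = (x / window_width).clamp(0.0, 1.0) as f32;
    min_hz * (max_hz / min_hz).powf(t)
}
```

The result of such a function is what would be passed to set_value() when a cursor event arrives.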

Note on the Pi

You need to draw something in that window; otherwise, it won't be created on the Raspberry Pi.

Controlling parameters with MIDI

Another way to control parameters is through a MIDI controller. To do that, there is a library called midir. This sets up a host and lets you choose MIDI devices to connect.

The MIDI connection also needs to run in a separate thread. You have to set this up yourself with std::thread::spawn(move || { });

The connection setup and the MIDI message reading both happen inside that closure. To keep the thread alive, it needs a loop that runs over and over again with a short delay in each iteration.

To get the MIDI data out again, you can use an MPSC (multi-producer, single-consumer) channel. Whenever a MIDI message comes in, the MIDI thread sends it to the main (window) thread, which in turn deciphers it and updates a variable that is shared with the audio thread.
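The thread-plus-channel pattern described above can be sketched with the standard library only. Here `spawn_midi_thread` is a hypothetical stand-in that replays a fixed list of messages instead of talking to a real device through midir, and `parse_midi` only understands note-on and note-off.

```rust
use std::sync::mpsc::{self, Receiver};
use std::thread;
use std::time::Duration;

/// The subset of MIDI messages this sketch understands.
#[derive(Debug, PartialEq)]
pub enum MidiEvent {
    NoteOn { note: u8, velocity: u8 },
    NoteOff { note: u8 },
}

/// Decode a raw 3-byte channel message (status, data1, data2).
pub fn parse_midi(bytes: &[u8]) -> Option<MidiEvent> {
    match *bytes {
        [status, note, velocity] => match status & 0xF0 {
            0x90 if velocity > 0 => Some(MidiEvent::NoteOn { note, velocity }),
            // By MIDI convention, note-on with velocity 0 means note-off.
            0x90 | 0x80 => Some(MidiEvent::NoteOff { note }),
            _ => None,
        },
        _ => None,
    }
}

/// MIDI note number to frequency in Hz (A4 = note 69 = 440 Hz).
pub fn note_to_hz(note: u8) -> f32 {
    440.0 * 2f32.powf((note as f32 - 69.0) / 12.0)
}

/// Hypothetical MIDI thread: forwards raw messages through an MPSC channel.
/// `incoming` is test data; a real thread would receive bytes from a device.
pub fn spawn_midi_thread(incoming: Vec<[u8; 3]>) -> Receiver<[u8; 3]> {
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        for bytes in incoming {
            // Stop if the receiving side has gone away.
            if tx.send(bytes).is_err() {
                break;
            }
            // The short delay that keeps the loop from spinning.
            thread::sleep(Duration::from_millis(1));
        }
    });
    rx
}
```

On the receiving side, the window thread would drain the channel without blocking (for example with `try_recv()`), call `parse_midi` on each message and write the result of `note_to_hz` into the shared variable.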

This works on a fast machine but is a very bad pattern.

First, the MPSC channel is not the best tool for sharing time-critical data.

Second, and even worse, the window loop is what determines when the variables get updated.

Learnings

Decoupling Audio from the Main Thread

In my case, I was trying to build an arpeggiator. The trigger and frequency were sent by the window thread on each iteration of its loop. The key was a phasor, built by taking a continuously increasing time value and wrapping it with modulo 1 at a fixed interval.

This gave me a number going from 0 to 1 at a fixed interval. Based on this, I changed the frequency by stepping through a vector of notes and also triggered the ADSR in the synth.
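The phasor and the note stepping can be sketched as follows. The `Arp` type and the way it detects a wrap (the phase being smaller than on the previous call) are assumptions for illustration; the original code may have detected the wrap differently.

```rust
/// Phasor: ramps from 0.0 up to (but not including) 1.0 once per period.
pub fn phasor(time_s: f64, period_s: f64) -> f64 {
    (time_s / period_s).rem_euclid(1.0)
}

/// Hypothetical arpeggiator that advances one note each time the phasor wraps.
pub struct Arp {
    notes: Vec<f32>, // frequencies in Hz
    index: usize,
    last_phase: f64,
}

impl Arp {
    pub fn new(notes: Vec<f32>) -> Self {
        Self { notes, index: 0, last_phase: 0.0 }
    }

    /// Call with the current time. Returns the next frequency when the
    /// phasor has wrapped since the previous call, otherwise `None`.
    pub fn tick(&mut self, time_s: f64, period_s: f64) -> Option<f32> {
        let phase = phasor(time_s, period_s);
        // A falling phase means the ramp restarted between the two calls.
        let wrapped = phase < self.last_phase;
        self.last_phase = phase;
        if wrapped && !self.notes.is_empty() {
            self.index = (self.index + 1) % self.notes.len();
            Some(self.notes[self.index])
        } else {
            None
        }
    }
}
```

Each `Some(freq)` is the moment where the frequency would be updated and the ADSR triggered. Note that the wrap is only noticed when `tick` is called, so the caller's own timing leaks into the rhythm.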

This worked totally fine on a fast machine (around 120 fps), but on the Raspberry Pi it sounded weird. At lower frame rates, the note changes and triggers no longer happen at regular intervals.

The timing is not perfectly regular on a faster machine either, but on the Pi the irregularity was clearly audible. This is a problem I had and was not able to fix. What is needed is a global shared state that calculates time-based events on its own and that window or MIDI events can change without affecting each other.
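Why the frame rate matters can be shown with a little arithmetic: a loop that checks the sequencer once per frame can only notice a step at the first frame after it was due. The sketch below computes those detection times under the simplifying assumption of perfectly even frames. The function names and the 20 fps figure used afterwards are hypothetical, not measurements from the Pi.

```rust
/// Times (in seconds) at which a loop that polls once per frame would first
/// notice each step boundary. Assumes perfectly even frames, which real
/// window loops do not have.
pub fn detected_step_times(fps: f64, period_s: f64, steps: usize) -> Vec<f64> {
    (1..=steps)
        .map(|step| {
            let boundary = step as f64 * period_s;
            // First frame at or after the boundary.
            (boundary * fps).ceil() / fps
        })
        .collect()
}

/// Largest deviation of any detected step interval from the ideal period.
pub fn max_interval_error(fps: f64, period_s: f64, steps: usize) -> f64 {
    detected_step_times(fps, period_s, steps)
        .windows(2)
        .map(|w| ((w[1] - w[0]) - period_s).abs())
        .fold(0.0, f64::max)
}
```

With a step period of 0.125 s polled at 20 fps, the detected intervals alternate between 0.10 s and 0.15 s instead of a steady 0.125 s. In general a step can be noticed up to one frame late, so the worst-case error is about 8 ms at 120 fps but 50 ms at 20 fps.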

Audio is complicated

My initial idea was to compile code from FAUST and just use it in my program. It turned out that while FAUST can compile to Rust, the generated code was not usable for me. Building a solid way of delivering samples to the audio stream is a complex topic.

Open Topics

Fix parameter change issue: Search for a better pattern to update shared parameters that is not dependent on other threads.

Adjust the synth engine to sound more interesting.

Consider ways of sequencing and how to interact with it.

Next Steps

After setting up graphics and audio, my next step will be to create the user interface. This can be done by adding a UI to the window or by controlling a small display attached to the Raspberry Pi. Since both ways are interesting, I will combine them.

Set up a desktop UI and show some text, sliders, and a select box.

Set up an embedded screen and show some text.

While there are many more things to do, let's focus on that part first.